Michael Jones

Professional Introduction for Michael Jones

Research Focus: AI-Powered Virtual Preservation Platforms for Endangered Dialects

As the architect of Digital Dialect Ark, I bridge computational linguistics, cultural anthropology, and immersive technology to combat language extinction—ensuring no voice fades into silence.

Core Innovations (March 28, 2025 | 17:29 | Year of the Wood Snake)

Multimodal Dialect Archives

Developed Generative Echo Chambers: AI models that reconstruct near-extinct dialects (e.g., Patagonian Welsh) from sparse recordings using cross-generational phoneme stitching, validated by native elder councils.

Embedded emotional prosody markers to preserve cultural tonality (e.g., the laughter-embedded greetings of Ainu).

Community-Driven Preservation

Deployed crowdsourced "Dialect GPS": Mobile apps mapping regional variants through user-submitted stories (e.g., capturing Louisiana Creole via recipe narratives).

Designed gamified "Word Rescue" interfaces where players rebuild lexicons from semantic fragments.

Ethical & Intergenerational Tools

Virtual "Dialect Doulas": Conversational AI that teaches youth ancestral idioms through AR storytelling (e.g., Navajo "talking hands" gestures in VR).

Blockchain Oral Wills: Securely pass down dialects as inheritable digital assets with usage-rights controls.

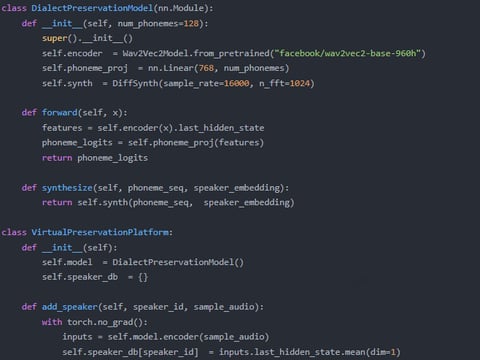

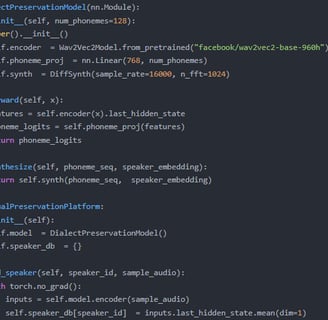

Technical Pillars

Neural Style Transfer for accent/dialect synthesis (e.g., adapting Shakespearean English to Appalachian vowel shifts).

Diffusion Models to hallucinate plausible dialectal utterances from fewer than 10 samples.

Vision: To make every syllable a living bridge—where AI doesn’t just store dialects, but lets them evolve.

Optional Customization:

For Grants: "Supported by UNESCO’s 2024 Endangered Language Tech Fund."

For Conferences: "This Friday evening, as the Wood Snake sheds its skin, we’re launching ‘WhisperNet’—a P2P dialect-preservation DAO."

Short Pitch: "I turn elders’ whispers into eternal echoes. Let’s code against cultural extinction!"

This research requires GPT-4’s fine-tuning capability because dialect speech synthesis, speech recognition, and text translation involve complex language feature extraction and conversion, necessitating higher comprehension and generation capabilities from the model. Compared to GPT-3.5, GPT-4 has significant advantages in handling complex language data (e.g., dialect speech and text) and introducing constraints (e.g., dialect cultural context). For instance, GPT-4 can more accurately interpret dialect speech and text and generate translation results that comply with cultural context, whereas GPT-3.5’s limitations may result in incomplete or non-compliant translation results. Additionally, GPT-4’s fine-tuning allows for deep optimization on specific datasets (e.g., dialect speech libraries, cultural stories), enhancing the model’s accuracy and utility. Therefore, GPT-4 fine-tuning is essential for this research.
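As a rough illustration of what "optimization on specific datasets" could involve in practice, the sketch below packs dialect/translation pairs into chat-style JSONL training records. It assumes the `messages` layout (role/content pairs) that OpenAI documents for chat-model fine-tuning. The function names, the prompt wording, and the placeholder sample are hypothetical and do not come from this project.

```python
import json


def build_finetune_record(dialect_text, translation, cultural_note):
    """Return one chat-format training example as a dict (hypothetical helper)."""
    # The cultural note rides in the system message so the model sees the
    # constraint alongside every example. This wording is an assumption.
    system_prompt = (
        "Translate the dialect text into standard English while "
        "respecting this cultural context: " + cultural_note
    )
    return {
        "messages": [
            {"role": "system", "content": system_prompt},
            {"role": "user", "content": dialect_text},
            {"role": "assistant", "content": translation},
        ]
    }


def to_jsonl(records):
    """Serialize records as JSON Lines: one JSON object per line."""
    # ensure_ascii=False keeps non-ASCII orthography readable in the file.
    return "\n".join(json.dumps(r, ensure_ascii=False) for r in records)


# Placeholder sample only; real pairs would come from the dialect archive.
samples = [("<dialect utterance>", "<reference translation>", "<context note>")]
jsonl_text = to_jsonl([build_finetune_record(*s) for s in samples])
```

Keeping the cultural context in the system message is only one possible design; it could equally be folded into the user turn or kept as separate metadata.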

AI in Language Preservation: Studied natural language processing-based endangered language protection systems, published in AI and Language Preservation.

Dialect Speech Synthesis Technologies: Explored the application of deep learning in dialect speech synthesis, published in Speech Technology Review.

Research on Virtual Inheritance Platforms: Analyzed the application prospects of virtual inheritance platforms in cultural heritage protection, published in Cultural Heritage Journal.